Table of Contents

Natural Language Processing - Discourse Processing

Intro: Basic Notions

A Discourse is not just a set of sentences, it is primarily intended to convey information about something to the listener or reader so that both parties can understand each other.

Assuming that discourse's sentences are constructed in a grammatically correct way, in this Assignment we will learn about the properties of Discourse studied within Natural Language Processing, which ensure that a Discourse is correctly processed by human beings.

To achieve that, linguistic units need to manifest both of these properties between them:

- Cohesion: how textual elements are linked together through cohesive elements such as anaphors (she, he..), synonyms etc. The bold words refer to the same antecedent (Roberto).

- Roberto crashed his car. He has already fixed the broken door of his car.

- Coherence: that is about it making sense (how units are mixed to produce the overall meaning of a discourse)

- Roberto crashed his car. He has already fixed the broken door of his car.

- Roberto crashed his car. His uncle is taller than his father.

If these two properties are satisfied, the listener or the reader is able to correctly incrementally construct a mental model called discourse model containing all the representations of the text entities, with their properties and relationships between them.

To satisfy the two previous properties so that a set of sentences can be a well defined Discourse in this assignment I will talk about two important areas that study coherence and cohesion and for each of them I will give you examples and in particular for the coreference section I will show you an important resolution algorithm (at the bottom you will find the guide on how to reproduce it on your PC).

Coreference

In the real world the coreference is one of the most important component of a discourse and humans in many cases use it when they speak without being aware.

What is Coreference? To explain it let's use this example:

John plays the guitar very well, he probably took lessons in the past. Every saturday, the guitarrist meets his other friends.

In this example:

- John, he and the guitarrist are mentions or referring expressions

- John is the referent

The term coreference derives from the verb to co-refer that happens when more “mentions” (he, the guitarrist) are used to refer to the same “referent” (John). In the previous example [“John”, “he”, “the guitarrist”] corefer.

Anaphora

A common linguistic phenomenon that we use frequently is the anaphora that is a reference to a specific entity that has been previously introduced into the text so is acquired on the basis of the discourse knowledge. The reader (or the listener) can interpret the meaning of the current phrase on the basis of the preceding sentences. Example:

- Maria is a professional pianist. She started playing the piano since she was 4 years old.

Maria is the antecedent and she is the anaphor.

Cataphora is the reverse process that means, considering the example below, the referent anaphor (John) precedes the antecedent (his).

Example:

- After his guitar lesson, John will go to home.

Notice that not all anaphoric relations are coreferential, despite most of the time they are. Example:

- John went to see a concert yesterday. The tickets were very expensive.

The tickets is the anaphoric because the interpretation depends on the previous word concert but is not an identity relashionship, that means that The tickets and concert are different entities in real world so they are not coreferential.

Mention Types

In this table are listed all mention types which can occur in an anaphoric phenomenon.

| Mention Types | Description | Components | Example |

|---|---|---|---|

| Indefinite Noun Phrases | Generally introduces entities that are new to the hearer | a, an, some | “Peter saw a strange person in the park” |

| Definite Noun Phrases | Refers to an entity that has been previously mentioned | the | “This Ferrari is the only white one left in the shop. The price is around 500 thousand euros for the white car” |

| Pronouns | Used for entities that are the most salient in the discourse and they are very useful to avoid boring repetitions of the referent in the discourse | he, she, it, his, her, him | Camilla used to study at her University study rooms instead of her house. She says she focuses in a distraction-free place |

| Demostrative Pronouns | Can be indefinite or definite | this, that | I saw this beautiful flower today |

| Zero Anaphora | It often happens in many Latin languages such as Spanish, Italian or including Japanese or Chinese. In these languages the pronoun is often missing in the sentence following the one in which the anaphor is introduced | - | “Ludovico uscì a far la spesa in auto. Nel tragitto [he is missing] fece benzina” |

| Names | Used most of the time as referent when the name introduce a new entity in the discourse. Some time they also refer to an old entitiy already introduced in the text. | <names> | William Shakespeare was an English poet considered the greatest writer in the English language and the world's greatest dramatist |

Properties of the antecedent-anaphor pair

This table shows the main properties in the anaphora context between antecedent and his anaphor. Some of these properties will then be useful within the Hobbs's anaphora resolution algorithm that will be explain in the next paragraphs.

The pair = means both antecedent and anaphor.

| Property | Description | Example |

|---|---|---|

| Number Agreement | The pair must agree in terms of number. She,her,he,him,his,it are singular, instead, we,us,they,them are plural. You is unspecified. | Correct: Soft Inc. is a software company. They develop a new application that monitor user health. Incorrect: In the 1930s, 30% of the inhabitants of the XXC island were poor. Now, 90% of his have an average salary of $ 32,000 per year. |

| Gender Agreement | Nouns and pronouns have a grammatical gender: male (Robert,John,he,his,him), female (Lucy,Elisabeth,she,her) and finally the nonpersonal(it) | Jennifer eats in this famous restaurant. She is exciting. (she=Jennifer) Jennifer eats in this famous restaurant. It is exciting.(it=the restaurant) |

| Recency | Elements introduced in recent sentences are more relevant than those insert into old utterrances. | Mary ordered a big pizza. Jeremy chose to eat only a salad. It was a healthier meal |

| Person Agreement | Basically, a pronoun's antecedent must agree with the pronoun in terms of person. | Correct: Tom is a little cat. He likes to eat fish every day Incorrect: Pamela teaches music in a school. He likes to organize her lessons by mixing theory and practice. |

| Binding Theory Constraints | Reflexive pronouns corefer with the candidate referent of the most immediate lexical section that contains them. | Marco observes himself in the mirror. [himself=Marco] Marco observes him in the mirror. [him=Marco] |

| Grammatical Role | In terms of relevant order, entities insert in subject position are more relevant than those in object position | George went to the cinema with Robert. He chose the movie. [He=George] Robert went to the cinema with George. He chose the movie. [He=Robert] |

Anaphora Resolution

Anaphora Resolution (AR) is simply a solution that identifies the antecedent of a anaphor with a certain precision based on factors that can be constrains that, for instance, remove a set of candidate noun phrases because do not satisfy certain requirements. For some algorithms (i.e Hobbs algorithm), the selection criteria is based on the fact that the candidates must comply the properties listed on the previous table. AR algorithms are grouped by the methodology in which they operate:

- Rule-based approach

- Machine Learning (ML) approach

Nowadays the ML-based approach has had enormous popularity BUT considerable number of studies (Barbu 2001; Preiss 2002a; Stuckardt 2002;2004;2005) claims that ML don't necessarily perform better than traditional rule-based approaches.

Hobbs's algorithm has 88% accuracy tested in correct parsed sentences.

Hobbs's algorithm

The Hobbs solution (1978) is a syntax-based tree-search algorithm that operates on already parsed sentences. It was originally created for 3rd person anaphoric pronouns only.

The algorithm has 2 inputs: the anaphor (a pronoun) and the text's tree containing the antecedent that will be identified. The algorithm assumes that the input sentence tree is correctly parsed otherwise it will not be able to correclty solve the problem. Thus the effectiveness of the algorithm depend on the grammar (parser) used to elaborate the sentence's tree.

The algorithm do not consider how to find the effective grammar of the sentence that will be elaborated. To fulfill this requirement in this implementation, we will adopt the StanfordCoreNLParser parser that is a module able to determining the syntactic structure of a text by analyzing its constituent words.

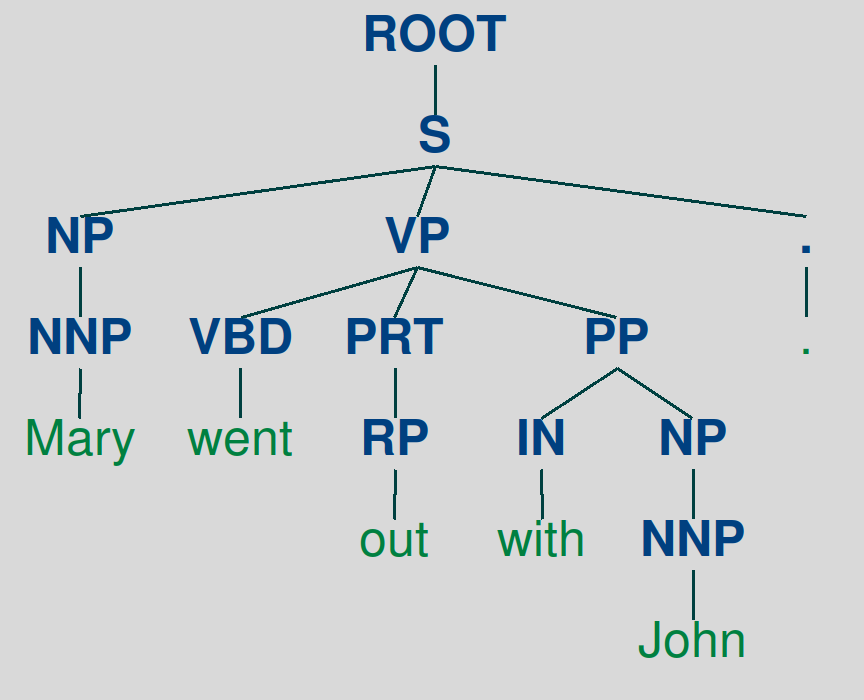

The algorithm uses Treebank tags that are converted into NLTK Trees.

In linguistics, a treebank is a parsed text corpus (a bank of linguistic trees) that annotates syntactic or semantic sentence structure.

The corpus in a Treebank is annotated with part-of-speech (POS) tags, that is, marking up every single linguistic item of a text that have similar grammatical properties.

In the following table, are listed some of the POS tags for the English corpus.

Description of the algorithm

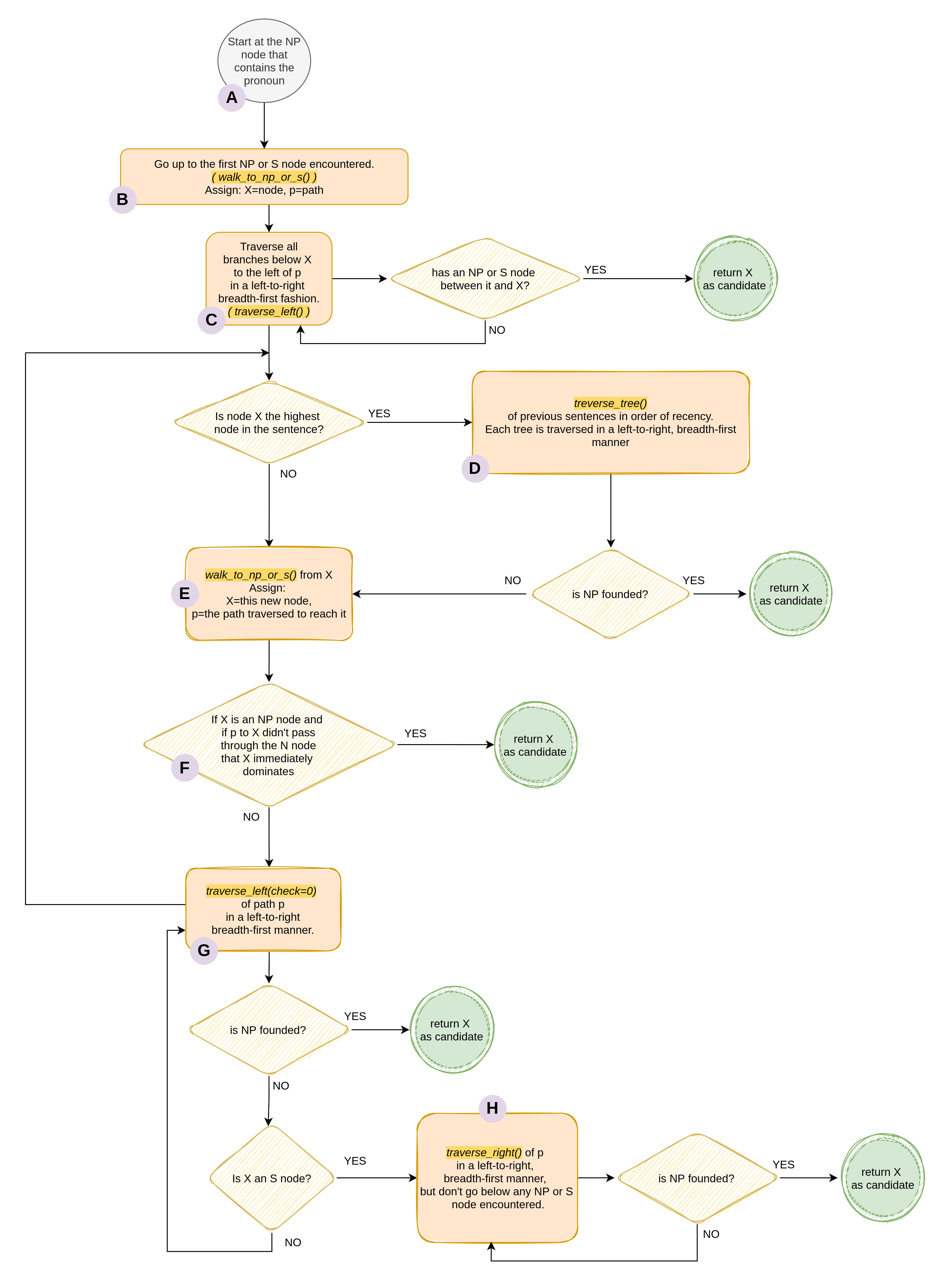

The steps of the Hobbs's algorithm are described in the following flowchart and each letter corresponds to each step of the algorithm below the chart:

In this implementation I consider the only case of the English language. All possible pronouns are stored within vectors and will then be used for comparison.

reflexive_pronouns = ["Himself", "himself", "Herself", "herself", "Itself", "itself", "Themselves", "themselves"]

pronouns = ["He", "he", "Him", "him", "She", "she", "Her", "her", "It", "it", "They", "they"]

pronoun_numbers = {

"NN": "singular",

"NNP": "singular",

"he": "singular",

"she": "singular",

"him": "singular",

"her": "singular",

"it": "singular",

"himself": "singular",

"herself": "singular",

"itself": "singular",

"NNS": "plural",

"NNPS": "plural",

"they": "plural",

"them": "plural",

"themselves": "plural",

"PRP": None

}

male_p = ["he", "him", "himself"]

nominal_labels = ["NN", "NNS", "NNP", "NNPS", "PRP"]

female_p = ["she", "her", "herself"]

neuter_p = ["it", "itself"]

This is the first function to be called by the program and, as you can see, there are two possible ways to process the input text. As already mentioned, through the use of the StanfordCoreNLP module that parses the sentences OR by providing the text in the form of a Treebank tag tree. In the first case, due to the fact that the parser returns a list of possible trees for the input string, for simplicity, in this implementation we will always consider the first proposed result.

if __name__ == "__main__": if len(sys.argv) < 4 or len(sys.argv) > 5: print('\033[91m' + "Parameters Error" + '\033[0m') exit(-1) else: if sys.argv[1] in ["--core-nlp", "-c"]: parser = CoreNLPParser(url='http://localhost:9000') trees, sentences = None, None if sys.argv[2] in ["--file", "-f"]: sentences = read_from_file(sys.argv[3]) elif sys.argv[2] in ["--string", "-s"]: sentences = sys.argv[3].split(". ") else: print('\033[91m' + "Parameters Error" + '\033[0m') exit(-1) # for simplicity it always choose the first proposed result trees = [list(parser.raw_parse(s)).pop() for s in sentences] hobbs.resolve(sys.argv[4], trees) elif sys.argv[1] in ["--treebank-tags", "-t"]: trees = get_trees(sys.argv[2]) hobbs.resolve(sys.argv[3], trees) else: print('\033[91m' + "Parameters Error" + '\033[0m') exit(-1)

If the parameters are correct, the previous function calls the function below which has the purpose of finding the exact position of the pronoun (to be solved) within the tree and once the possible candidate is found, format the program output well.

def resolve(pronoun, trees): pos = utils.get_pos(trees[-1], pronoun) if pos is None: print('\033[93m' + "The passed pronoun was not found in the discourse. Retry" + '\033[0m') exit(-1) pos = pos[:-1] tree = [] if pronoun in utils.pronouns: tree, pos = hobbs_algorithm(trees, pos) elif pronoun in utils.reflexive_pronouns: tree, pos = resolve_reflexive(trees, pos) if (tree, pos) != (None, None): print('\033[93m' + "\n\n======== Parsed Sentences ========\n\n" + '\033[0m') for t in trees: t.pretty_print() print('\033[92m' + "\nThe pronoun " + "\"" + pronoun + "\"" + " probably refers to:\n" + '\033[0m') tree[pos].pretty_print() return return print('\033[93m' + "No antecedent found" + '\033[0m')

Here we have the core of the algorithm whose mechanism was previously presented by the flow diagram. It takes the list of sentences to be searched and the position of the pronoun to be resolved. Finally, the function returns the candidate that is a tuple containing the tree and the position of the candidate

def hobbs_algorithm(sentences, pos): sentence_index = len(sentences) - 1 # Get the most recent sentence index tree, pos = utils.get_dom_np(sentences, pos) # A pronoun = utils.get_pronoun(tree, pos) path, pos = utils.walk_up_to(tree, pos, ["NP", "S", "ROOT"]) # B candidate = traverse.traverse_left(tree, pos, path, pronoun) # C while candidate == (None, None): if pos == (): # go to the previous sentence sentence_index -= 1 if sentence_index < 0: return None candidate = traverse.traverse_tree(sentences[sentence_index], pronoun) # D if candidate != (None, None): return candidate path, pos = utils.walk_up_to(tree, pos, ["NP", "S", "ROOT"]) # E if "NP" in tree[pos].label() and tree[pos].label() not in utils.nominal_labels: # F for c in tree[pos]: if isinstance(c, nltk.Tree) and c.label() in utils.nominal_labels: if utils.get_pos(tree, c) not in path and match.match(tree, pos, pronoun): candidate = (tree, pos) if candidate != (None, None): return candidate candidate = traverse.traverse_left(tree, pos, path, pronoun, check=0) # G if candidate != (None, None): return candidate if tree[pos].label() in ["S"]: # H candidate = traverse.traverse_right(tree, pos, path, pronoun) if candidate != (None, None): return candidate return candidate

This function is used in the previous one and its inputs are the tree and the pronoun. If a NP node is found and it agrees with the the properties of the antecedent-anaphor pair it return the candidate, otherwise if no antecedent is found it returns None.

def traverse_tree(tree, pro): # Initialize a queue and enqueue the root of the tree queue = Queue.Queue() queue.put(tree) while not queue.empty(): node = queue.get() # if the node is an NP, return it as a potential antecedent if "NP" in node.label() and match.match(tree, utils.get_pos(tree, node), pro): return tree, utils.get_pos(tree, node) for child in node: if isinstance(child, nltk.Tree): queue.put(child) # if no antecedent is found, return None return None, None

In this traverse function, the check parameter indicates whether (check=1) the function before return the node found must examine that it has not an NP or S node between it and the position of the root's subtree to be resolved. Otherwise, (check=0) it doesn't care and must return any NP node encountered as the antecedent.

def traverse_left(tree, pos, path, pro, check=1): # get the results of breadth first search of the subtree # iterate over them breadth_first = bft(tree[pos]) # convert the treepositions of the subtree rooted at pos # to their equivalents in the whole tree bf_pos = [utils.get_pos(tree, node) for node in breadth_first] if check == 1: for p in bf_pos: if p < path[0] and p not in path: if "NP" in tree[p].label() and match.match(tree, p, pro): if check_for_intervening_np(tree, pos, p, pro): return tree, p elif check == 0: for p in bf_pos: if p < path[0] and p not in path: if "NP" in tree[p].label() and match.match(tree, p, pro): return tree, p return None, None

The last type of traverse function visits the tree to the right.

def traverse_right(tree, pos, path, pro): breadth_first = bft(tree[pos]) bf_pos = [utils.get_pos(tree, node) for node in breadth_first] for p in bf_pos: if p > path[0] and p not in path: if "NP" in tree[p].label() or tree[p].label() == "S": if "NP" in tree[p].label() and tree[p].label() not in utils.nominal_labels: if match.match(tree, p, pro): return tree, p return None, None

The following function is used to climb the tree until a node that is included in the target nodes is encountered. The “targets” parameter is an array of strings, each of them corresponds to the node label in the Treebank tree. It returns the pair path and position.

def walk_up_to(tree, pos, targets): path = [pos] still_looking = True while still_looking: # climb one level up the tree by removing the last element # from the current tree position pos = pos[:-1] path.append(pos) # if an S node is encountered, return the path and pos if tree[pos].label() in targets: still_looking = False return path, pos

The next function simply finds the position of the parent NP node that contains the pronoun.

def get_dom_np(sents, pos): # start with the last tree in sents tree = sents[-1] # get the NP's position by removing the last element from # the pronoun's dom_pos = pos[:-1] return tree, dom_pos

The function below, returns the tree position of the node that is equals to the node that we want to search. A tree position is a set of integers (starting from 0) that corresponds to the branch index starting from the left (0 corresponds to the leftmost branch….N is the rightmost branch) and they are ordered from the root of the tree to its leaves.

def get_pos(tree, node): for pos in tree.treepositions(): if tree[pos] == node: return pos return None

This function is used to check whether the pair [current node]-[pronoun to be resolved] agrees to the number and gender agreement. Returns a boolean.

def match(tree, pos, pro): return number_match(tree, pos, pro) and gender_match(tree, pos, pro)

This method check if the proposed antecedent match in terms of number agreement simply verifying if is present inside the map of pronoun-number.

def number_match(tree, pos, pro): for c in tree[pos]: if isinstance(c, nltk.Tree) and c.label() in utils.nominal_labels: if utils.pronoun_numbers[c.label()] == utils.pronoun_numbers[pro]: return True return False

The following function takes the current candidate and the pronoun to be resolved and checks if they match in terms of gender agreement. The names are taken from the nltk corpus.

Inside the main loop is checked only the mismatches between singular proper name antecedents and singular pronouns.

def gender_match(tree, pos, pro): male_names = (name.lower() for name in names.words('male.txt')) female_names = (name.lower() for name in names.words('female.txt')) male_pronouns = utils.male_p female_pronouns = utils.female_p neuter_pronouns = utils.neuter_p for c in tree[pos]: if isinstance(c, nltk.Tree) and c.label() in utils.nominal_labels: # If the proposed antecedent is a recognized male name, # but the pronoun being resolved is either female or # neuter, they don't match if c.leaves()[0].lower() in male_names: if pro in female_pronouns: return False elif pro in neuter_pronouns: return False elif c.leaves()[0].lower() in female_names: if pro in male_pronouns: return False elif pro in neuter_pronouns: return False # If the proposed antecedent is a numeral, but the # pronoun being resolved is not neuter, they don't match elif c.leaves()[0].isdigit(): if pro in male_pronouns: return False elif pro in female_pronouns: return False return True

To resolve reflexive pronouns, the resolve() calls this function where starting from the np parent node that contains the pronoun to be resolved it goes back to the first S node and searchs for the matching antecedent candidate.

def resolve_reflexive(sents, pos): tree, pos = utils.get_dom_np(sents, pos) pro = utils.get_pronoun(tree, pos) path, pos = utils.walk_up_to(tree, pos, ["S"]) return traverse.traverse_tree(tree, pro)

This function counts the NP nodes:

def count_np_nodes(tree): if not isinstance(tree, nltk.Tree): return 0 elif "NP" in tree.label() and tree.label() not in utils.nominal_labels: return 1 + sum(count_np_nodes(c) for c in tree) else: return sum(count_np_nodes(c) for c in tree)

This function check whether subtree rooted at current position contains at least 3 NP nodes, one of which is:

- not the candidate

- not the pronoum

- greater than the candidate

It returns true if there is an NP between the proposal and the pronoum to be resolverd, false otherwise.

def check_for_intervening_np(tree, pos, proposal, pro): bf = bft(tree[pos]) bf_pos = [utils.get_pos(tree, node) for node in bf] if count_np_nodes(tree[pos]) >= 3: for node_pos in bf_pos: if "NP" in tree[node_pos].label() \ and tree[node_pos].label() not in utils.nominal_labels: if node_pos != proposal and node_pos != utils.get_pos(tree, pro): if node_pos < proposal: return True return False

Here it is implemented a Breadth First Search of the tree and it returns a list of the nodes in left to right level order.

def bft(tree): lst = [] queue = Queue.Queue() queue.put(tree) while not queue.empty(): node = queue.get() lst.append(node) for child in node: if isinstance(child, nltk.Tree): queue.put(child) return lst

Limitations

This algorithm was originally implemented for the English language. Not all languages have parsers. Parsers are not always accurate. In this algorithm the accuracy depends a lot on the parsed sentence.

Discourse Coherence

In a language, a discourse coherence means that the relationships between its sentences are able to generate real discourses instead of simple random assemblages of sentences. We can judge and measure the degree of coherence of a speech through the following theories::

- The entity-based coherence: being “about” some entity. This is evaluated by the Centering Theory (Grosz et al. 1995)

- The coherence relation between 2 or more spans in the text. This is analyzed by the Rhetorical Structure Theory (William Mann and Sandra Thompson 1988)

The previous properties will be explained in detail in the next two paragraphs.

Rhetorical structure theory

David took the train from Rome to Turin. He likes sushi.

David took the train from Rome to Turin. He had to visit his parents.

We can confirm that the last text is more coherent.

How can I explain the fact that the first sentence was less coherent than the second?

Due to the strong connection between the two sentences. In fact, the second one transmit a REASON for the first one. This phenomenon is called coherence relation and can be described with the most used model of discourse organization, the Rhetorical Structure Theory (RST).

In this theory, in a text the relations between two spans which are respectively called:

- nucleus: is the unit that is more central to the writer's purpose and interpretable independently

- satellite: is less central and it is generally interpretable by means of the nucleus.

The RST uses a set of 23 rhetorical relations that can hold between spans of text within a discourse, below are a few examples of them (satellite is in bold):

- Reason: the satellite provides the reason for the action carried out in the nucleus.

- David took the train from Rome to Turin. He had to visit his parents.

- Elaboration: the satellite gives more details about the situation presented in the nucleus.

- Jim was from Turin. He always lived in the downtown.

- Evidence: has the same property of the previous one but also claims that the information is presented with the the goal of convincing the reader to accept the information provided in the nucleus.

- I think Robert wants to try to take that exam. He's been preparing it for months.

- Attribution: the satellite gives the source of attribution for an instance of reported speech in the nucleus.

- Anna told the class that there will be an exam the next week.

- List: a series of nuclei is given, without contrast or explicit comparison.

- Anna taught Maths. Robert taught History. Jim taught music

- Contrast: >=2 nuclei contrast along some important dimension.

- Here in Turin was freezing, but in Rio de Janeiro was warm.

Example

Here an excerpt from Scientific American (Marcu (2000)) is analyzed with the RST.

With its distant orbit 50% farther from the sun than Earth and slim atmospheric blanket, Mars experiences frigid weather conditions. Surface temperatures typically average about -70 degrees Fahrenheit at the equator, and can dip to -123 degrees C near the poles. Only the midday sun at tropical latitudes is warm enough to thaw ice on occasion, but any liquid water formed in this way would evaporate almost instantly because of the low atmospheric pressure. Although the atmosphere holds a small amount of water, and water-ice clouds sometimes develop, most Martian weather involves blowing dust or carbon dioxide.

Satellites

Nuclei

The leaves of the tree in below are the text intervals and are called elementary discourse units or EDU. Determining the boundaries of an EDU is a very task important for extractiong coherence relations.

Centering Theory

Idea

In a discourse there is an entity that is salient and we can consider a coherent discourse by continuing to discuss about the same entity.

Implementation

Discourses are composed by discourse segments, each of them consists of a sequence of utterances U1, U2, …, UN. Inside each utterance there are 2 possible representations:

- Cf(Un) forward looking centers: a set of potential next centers that correspond to the set of potential future salient entities (potential Cb(Un+1))

- Cb(Un) backward-looking center: except the first utterrance, the other ones will have exactly a single center defined that corresponds to the salient entity evoked by the current utterance being focused on in the discourse after the utterance is interpreted. It connects the current utterance to the previous discourse because it focuses on a entity that has already been introduced

The set of Cf(U) are ranked based on a variety of factors (e.g., grammatical role). The highest-ranked (usually the subject of the sentence) element in Cf(U) is called the preferred center Cp(U) and it corresponds to the most likely backward looking center of the next utterrance Cb(Un+1).

There are four possible intersentential relationships between Un and Un+1:

- Center continuation: the speaker/writter has continued talking about the same entity as in the previous utterance, and plans to keep doing that, this means that this entity is the most likely candidate for Cb(Un+2).

- Center retaining: the speaker is planning to shift to a new entity because the current entity is not the most highly ranked element in Cf(Un+1)

- Center shifting: the speaker shifted to a new entity. Brennan et al. (1987) distinguish between smooth-shift and rought-shift.

These 4 transitions are better described in the table below:

| Cb(Un+1) = Cb(Un) or undefined Cb(Un) | Cb(Un+1) != Cb(Un) | |

|---|---|---|

| Cb(Un+1) = Cp(Un+1) | Continue | Smooth-Shift |

| Cb(Un+1) != Cp(Un+1) | Retain | Rough-Shift |

A series of examples to better understand each transition:

Discourse stays focused on same entity:

- Pamela broke her flute.

- Cb(U1) = undefined

- Cp(U1) = Pamela

- Cf(U1) = {Pamela, flute}

- She went to a music shop to buy a new one.

- Cb(U2) = Pamela

- Cp(U2) = Pamela

- Cf(U2) = {Pamela, shop, flute}

- We have Center continuation

- She could find a good flute that was not very expensive.

- Cb(U3) = Pamela

- Cp(U3) = Pamela

- Cf(U3) = {Pamela, flute}

- We have Center continuation

Connecting sentence evoking next focus of discourse:

- Pamela broke her flute.

- Cb(U1) = undefined

- Cp(U1) = Pamela

- Cf(U1) = {Pamela, flute}

- She went to a music shop to buy a new one.

- Cb(U2) = Pamela

- Cp(U2) = Pamela

- Cf(U2) = {Pamela, shop, flute}

- We have Center continuation

- It was closing just as Pamela arrived.

- Cb(U3) = Pamela

- Cp(U3) = shop

- Cf(U3) = {shop, Pamela}

- We have Center retaining

Predictable change in focus:

- Pamela broke her flute.

- Cb(U1) = undefined

- Cp(U1) = Pamela

- Cf(U1) = {Pamela, flute}

- She went to a music shop to buy a new one.

- Cb(U2) = Pamela

- Cp(U2) = Pamela

- Cf(U2) = {Pamela, shop, flute}

- We have Center continuation

- It was about to close for the day.

- Cb(U3) = Pamela

- Cp(U3) = shop

- Cf(U3) = {shop, day}

- We have Center retaining

- It was her favourite shop in the world.

- Cb(U4) = shop

- Cp(U4) = shop

- Cf(U4) = {shop, Pamela, world}

- We have a smooth center shift

Change in discourse focus without smooth transition:

- Pamela chose a good flute that was not very expensive in the shop.

- Cb(U1) = undefined

- Cp(U1) = Pamela

- Cf(U1) = {Pamela, shop}

- It always allows customers to try out the musical instruments before buying them

- Cb(U2) = Pamela

- Cp(U2) = shop

- Cf(U2) = {shop, customers, musical instruments}

- We have Center retaining

- Francesco visited it just as she left.

- Cb(U3) = shop

- Cp(U3) = Francesco

- Cf(U3) = {Francesco, shop, Pamela}

- We have a rough center shift

Limitations of CT

This centering model only accounts for local coherence of discourse and not for the global case.

Applications of Discourse Processing

In the Anaphora Resolution context the indentification of anaphoric item is very important in a huge number of NLP applications such as:

- machine translation

- question answering

- information extraction

Anaphora Resolution is also important inside the text summarization application since techniques for extracting important sentences are more accurate if anaphoric references are taken into account as well.

The main important application of RST is to produce coherent summaries. Since RST states that the understanding of nuclei doesn't depend on his satellites: consequently the idea is that a summary can be generated from the rethorical structure tree by keeping only the nuclei and removing satellites.

This approach require to correctly determine the type of span and the relations between them.

The most popular application of the Centering Theory has been in anaphora resolution algorithms and to evaluate summaries. The method of the last one consists in assigning scores to summaries on the basis of a preferred ordering of transitions which occur between utterances within them.

Steps to execute the Hobbs's Pronoun Resolution Algorithm

Step 1

Download the code:

If you don't want to build the Treebank tree for each sentence every time, it is highly recommended to use the StanfordCoreNLParser.

Right mouse-click and click Save. Your browser will probably block the download due to security problems but just allow the download:

Once downloaded, unzip it and enter into the extracted folder, example:

cd stanford-corenlp-full-2018-02-27

Make sure you have java installed on your operating system and start the server from a terminal, and keep it alive for the duration of the execution of the project (so don't close the terminal). If you have Windows, an alert of Windows Defender Firewall will probably ask you to allow access to app features. Just allow it.

Make sure no processes are listening on port 9000 otherwise stop them otherwise choose other port. Run:

java -mx4g -cp "*" edu.stanford.nlp.pipeline.StanfordCoreNLPServer \ -preload tokenize,ssplit,pos,lemma,ner,parse,depparse \ -status_port 9000 -port 9000 -timeout 15000 &

Step 2

MacOS and Linux ship with Python3 pre-installed, for Windows you can install python3 from the official website: Official Python website

- Remember to check “Add Python 3.X to PATH” during the installation

Install pip3: The latest python3 installers for Windows install pip3 automatically. For MacOS and Linux, from terminal launch:

sudo apt-get update sudo apt-get -y install python3-pip

Install required dependencies:

[Windows/MacOs/Linux]> pip3 install -U nltk requests

From Terminal open the python3 CLI:

[MacOs/Linux]> python3 [Windows]> py

Once inside the CLI, launch:

[Windows/MacOs/Linux]

>> import nltk

>> nltk.download('names')

Step 3

From the project folder launch the main script but don't forget that YOU MUST enter the correct option parameters to tell the program:

How parsing will be processed:

- -c (or –core-nlp) to use the StanfordCoreNLParser

- -t (or –treebank-tag) if you want to use your custom Treebank tree for each sentence instead of normal strings.

[Only if you choose -c option] You must also choose one of the following options, to specify where the sentences will be read from:

- -f (or –file) indicates the name of the file where the phrases to be solved are stored

- -s (or –string) indicates that the simple sentence will be passed directly as a parameter

For instance: Choosing the StanfordCoreNLParser and the file where the sentences are saved:

[MacOs/Linux]> python3 main.py -c -f ./sentences/sent1_notag.txt "him" [Windows]> python.exe .\main.py -c -f .\sentences\sent1_notag.txt "him"

Choosing the StanfordCoreNLParser and the sentence passed directly as a parameter:

[MacOs/Linux]> python3 main.py -c -s "Mary went out with John. She likes him" "him" [Windows]> python.exe .\main.py -c -s "Mary went out with John. She likes him" "him"

Choosing your custom Treebank trees for each sentence:

[MacOs/Linux]> python3 main.py -t ./sentences/sent4.txt "herself" [Windows]> python.exe .\main.py -t ./sentences/sent4.txt "herself"