
A solution, called Conservative Training (CT), is now proposed to mitigate the forgetting problem. Since the Back-Propagation technique used for MLP training is discriminative, the units for which no observations are available in the adaptation set will have zero as a target value for all the adaptation samples. Thus, during adaptation, the weights of the MLP will be biased to favor the output activations of the units with samples in the adaptation set and to weaken the other units, whose posterior probabilities will be pushed ever closer to zero. Conservative Training does not set the targets of the missing units to zero; instead, it uses the outputs computed by the original network as their target values. Regularization, as proposed in [10], is another solution to the forgetting problem. Regularization has theoretical justifications and affects all the ANN outputs by constraining the variations of the network weights. Unfortunately, regularization does not directly address the problem of classes that do not appear in the adaptation set. We tested the regularization approach in a preliminary set of experiments, obtaining only minor improvements. Furthermore, we found it difficult to tune a single regularization parameter that could perform the adaptation while avoiding catastrophic forgetting. Conservative Training, on the contrary, explicitly takes all the output units into account, by providing target values that are estimated by the original ANN model on the adaptation samples, which belong to the units available in the adaptation set.
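The zero-target effect described above can be seen in a toy example. Assuming a softmax output layer trained with cross-entropy (an assumption; the loss is not stated in this section), the gradient of the loss with respect to the logit of unit i is y_i - t_i. For a unit with no adaptation samples t_i is always 0, so every update lowers its logit. The sketch below trains only the three output biases of a hypothetical network (the names `softmax` and `adapt_bias_only` are illustrative), with adaptation labels for units 0 and 1 only.

```python
import math
from typing import List, Sequence


def softmax(z: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def adapt_bias_only(logits: Sequence[float], labels: Sequence[int],
                    lr: float = 0.5, epochs: int = 50) -> List[float]:
    """Toy adaptation with standard 0/1 targets (hypothetical helper).

    Only the output logits (biases) are trained, a deliberately tiny
    stand-in for an MLP output layer. Units that never appear in
    `labels` always receive target 0.
    """
    z = list(logits)
    n = len(z)
    for _ in range(epochs):
        for c in labels:
            y = softmax(z)
            t = [1.0 if i == c else 0.0 for i in range(n)]
            # d(cross-entropy)/d(z_i) = y_i - t_i for a softmax output;
            # with t_i = 0 this is y_i > 0, so z_i can only decrease.
            z = [zi - lr * (yi - ti) for zi, yi, ti in zip(z, y, t)]
    return z


# Unit 2 is "missing": the adaptation data only contain units 0 and 1.
adapted = adapt_bias_only([0.0, 0.0, 0.0], labels=[0, 1])
print(softmax([0.0, 0.0, 0.0])[2])  # 1/3 before adaptation
print(softmax(adapted)[2])          # far smaller after adaptation
```

Nothing in the adaptation data says unit 2 is wrong for its own inputs; it simply never receives a positive target, which is the forgetting mechanism that CT is designed to avoid.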

Let ![]() be the set of phonetic units included in the adaptation set (

be the set of phonetic units included in the adaptation set (![]() indicates presence), and let

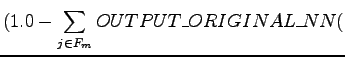

indicates presence), and let ![]() be the set of the missing units. In Conservative Training the target values are assigned as follows:

be the set of the missing units. In Conservative Training the target values are assigned as follows:

| (6) | |||

| (7) | |||

|

(8) | ||

| (9) |

where

![]() is the target value associated to the input pattern

is the target value associated to the input pattern ![]() for a unit

for a unit ![]() that is present in the adaptation set.

that is present in the adaptation set.

![]() is a target value associated to the input pattern

is a target value associated to the input pattern ![]() for a unit not present in the adaptation set.

for a unit not present in the adaptation set.

![]() is the output of the original network (before adaptation) for the phonetic unit i given the input pattern

is the output of the original network (before adaptation) for the phonetic unit i given the input pattern ![]() , and

, and

![]() is a predicate which is true if the phonetic unit

is a predicate which is true if the phonetic unit ![]() is the correct class for the input pattern

is the correct class for the input pattern ![]() .

Thus, a phonetic unit that is missing in the adaptation set will keep the value that it would have had with the original un-adapted network, rather than obtaining a zero target value for each input pattern.
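For a single frame, the target-assignment rule can be sketched as follows: missing units keep the original network's output, the correct present unit receives the remaining probability mass (one minus the total original output of the missing units, so the targets still sum to one), and the other present units get zero. This is a minimal illustration, not the authors' implementation; the function name `conservative_targets`, its arguments, and the representation of $F_P$ as a set of indices are assumptions, and the original outputs are assumed to be posteriors summing to one.

```python
from typing import List, Sequence, Set


def conservative_targets(original_outputs: Sequence[float],
                         correct_unit: int,
                         present_units: Set[int]) -> List[float]:
    """Conservative Training targets for one input frame O_t (sketch).

    original_outputs[i]: output of the original (un-adapted) network for
                         unit i given O_t.
    correct_unit:        the unit for which CORRECT(f_i, O_t) is true.
    present_units:       indices of units in F_P; all others form F_M.
    """
    if correct_unit not in present_units:
        raise ValueError("the labelled unit must be in the adaptation set")
    n = len(original_outputs)
    missing = [i for i in range(n) if i not in present_units]

    targets = [0.0] * n  # present but incorrect units: target 0
    for i in missing:
        # missing units keep the original network's output
        targets[i] = original_outputs[i]
    # the correct unit gets the remaining mass, so targets sum to one
    targets[correct_unit] = 1.0 - sum(original_outputs[i] for i in missing)
    return targets


# Four units; only units 0 and 1 occur in the adaptation data.
print(conservative_targets([0.5, 0.2, 0.2, 0.1], correct_unit=0,
                           present_units={0, 1}))
# [0.7, 0.0, 0.2, 0.1] up to floating-point rounding;
# standard 0/1 targets would instead be [1.0, 0.0, 0.0, 0.0]
```

When every unit is present in the adaptation set, $F_M$ is empty and the rule reduces to the usual one-hot targets, so CT only differs from standard training for the missing units.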

This policy, like many other target-assignment policies, is not optimal. Nevertheless, it has the advantage of being applicable in practice to large and very large vocabulary ASR systems, exploiting information from the adaptation environment while avoiding the destruction of the class boundaries of the missing classes.

It is worth noting that under badly mismatched training and adaptation conditions, for example in some environmental adaptation tasks, acoustically mismatched adaptation samples may produce unpredictable activations in the target network. This is a real problem for all adaptation approaches: if the adaptation data are scarce and have largely different characteristics (SNR, channel, speaker age, etc.), other normalization techniques must be used to transform the input patterns into a domain similar to the original acoustic space.

Although different strategies of target assignment can be devised, the experiments reported in the next sections have been performed using only this approach. Possible variations, within the same framework, include the fuzzy definition of missing classes and the interpolation of the original network output with the standard 0/1 targets.
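The second variation, interpolating the original network output with the standard 0/1 targets, could look roughly like the following. This is a hypothetical sketch only: the weight `lam`, the function name, and the choice to blend every unit's target are illustrative assumptions, since the exact interpolation scheme is not specified here, and this variant was not used in the experiments.

```python
from typing import List, Sequence


def interpolated_targets(original_outputs: Sequence[float],
                         correct_unit: int, lam: float) -> List[float]:
    """Hypothetical variant: blend original-network outputs with 0/1 targets.

    lam = 0 gives the standard one-hot targets; lam = 1 reproduces the
    original network's outputs, i.e. no adaptation signal at all.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return [lam * y + (1.0 - lam) * (1.0 if i == correct_unit else 0.0)
            for i, y in enumerate(original_outputs)]


print(interpolated_targets([0.5, 0.3, 0.2], correct_unit=1, lam=0.5))
```

Because both the original outputs and the one-hot vector sum to one, the blended targets do too. Unlike the rule used in the experiments, this blend also pulls the targets of the present units toward the original posteriors.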


Stefano Scanzio 2007-10-24