Another way to avoid catastrophic forgetting is to use Support Vectors (SV). Support Vectors are the patterns that lie on the boundary between classes; they can be seen as representatives of the classes, and together they describe the decision boundaries of a classifier.

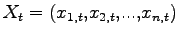

Let $X_t$ be the input patterns at time $t$ and $T_t$ the related targets at time $t$. A model $M$ is trained with a set of couples $(X_t, T_t)$. Since the model depends on the training patterns $X_t$ and on other parameters $\theta$ (like learning rate, momentum, ...), we refer to the model as $M(X_t, \theta)$. Support vectors are patterns with an undefined model response.

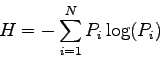

A good indicator of uncertainty is entropy:

$$H = -\sum_{i=1}^{N} p_i \log p_i \tag{1.10}$$

where $p_i$ in (1.10) is the posterior probability given by the $i$-th output of the model $M$ and $N$ represents the number of outputs of the model.

If the output vector has one output equal to $1$ and all the others equal to $0$, the entropy is minimal, $H = 0$. Conversely, the entropy is maximal, $H = \log N$, if all the outputs are equal to $1/N$, where $N$ is the number of network outputs. In this case the model $M$ has the highest uncertainty.
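The two extreme cases can be checked numerically. The following is a minimal sketch, assuming the output vector is a plain list of posterior probabilities that sum to one; the function name `entropy` and the example vectors are illustrative and do not come from the original text.

```python
import math

def entropy(posteriors):
    """Entropy H = -sum_i p_i * log(p_i) of one output vector."""
    # Terms with p_i == 0 contribute nothing (0 * log 0 is taken as 0).
    return sum(-p * math.log(p) for p in posteriors if p > 0.0)

one_hot = [1.0, 0.0, 0.0, 0.0]      # one output at 1, all the others at 0
uniform = [0.25, 0.25, 0.25, 0.25]  # all N = 4 outputs equal to 1/N

h_min = entropy(one_hot)   # minimal entropy, zero
h_max = entropy(uniform)   # maximal entropy, log(N) = log(4)
```

The natural logarithm is used here as an assumption; a different base only rescales the values and does not change which patterns are the most uncertain.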

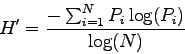

To make the entropy independent of the length of the output vector, a normalized entropy is used:

$$H_n = -\frac{1}{\log N} \sum_{i=1}^{N} p_i \log p_i \tag{1.11}$$
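As a rough illustration of how the normalized entropy could be used to pick support vector candidates, the sketch below keeps the patterns whose normalized entropy exceeds a threshold. The selection rule, the `threshold` value of 0.5, and the names `normalized_entropy` and `select_uncertain` are assumptions made for this example only; the text does not specify how the selection is performed.

```python
import math

def normalized_entropy(posteriors):
    """Entropy divided by log(N), so the result lies in [0, 1]."""
    n = len(posteriors)
    if n < 2:
        return 0.0  # a single output carries no uncertainty
    h = sum(-p * math.log(p) for p in posteriors if p > 0.0)
    return h / math.log(n)

def select_uncertain(patterns, outputs, threshold=0.5):
    """Return the patterns whose normalized entropy exceeds `threshold`.

    The threshold is a hypothetical tuning parameter, not a value
    taken from the text.
    """
    return [x for x, p in zip(patterns, outputs)
            if normalized_entropy(p) > threshold]

# Hypothetical pattern labels and model outputs (posteriors per pattern).
patterns = ["a", "b", "c"]
outputs = [
    [0.98, 0.01, 0.01],  # confident response, low entropy
    [0.40, 0.35, 0.25],  # nearly uniform response, high entropy
    [0.50, 0.50, 0.00],  # undecided between two classes
]
candidates = select_uncertain(patterns, outputs)
```

Because the normalized entropy always lies between 0 and 1, the same threshold can be applied to models with different numbers of outputs.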

Stefano Scanzio

2007-10-24
